What if Taylor Swift didn't own her own face?

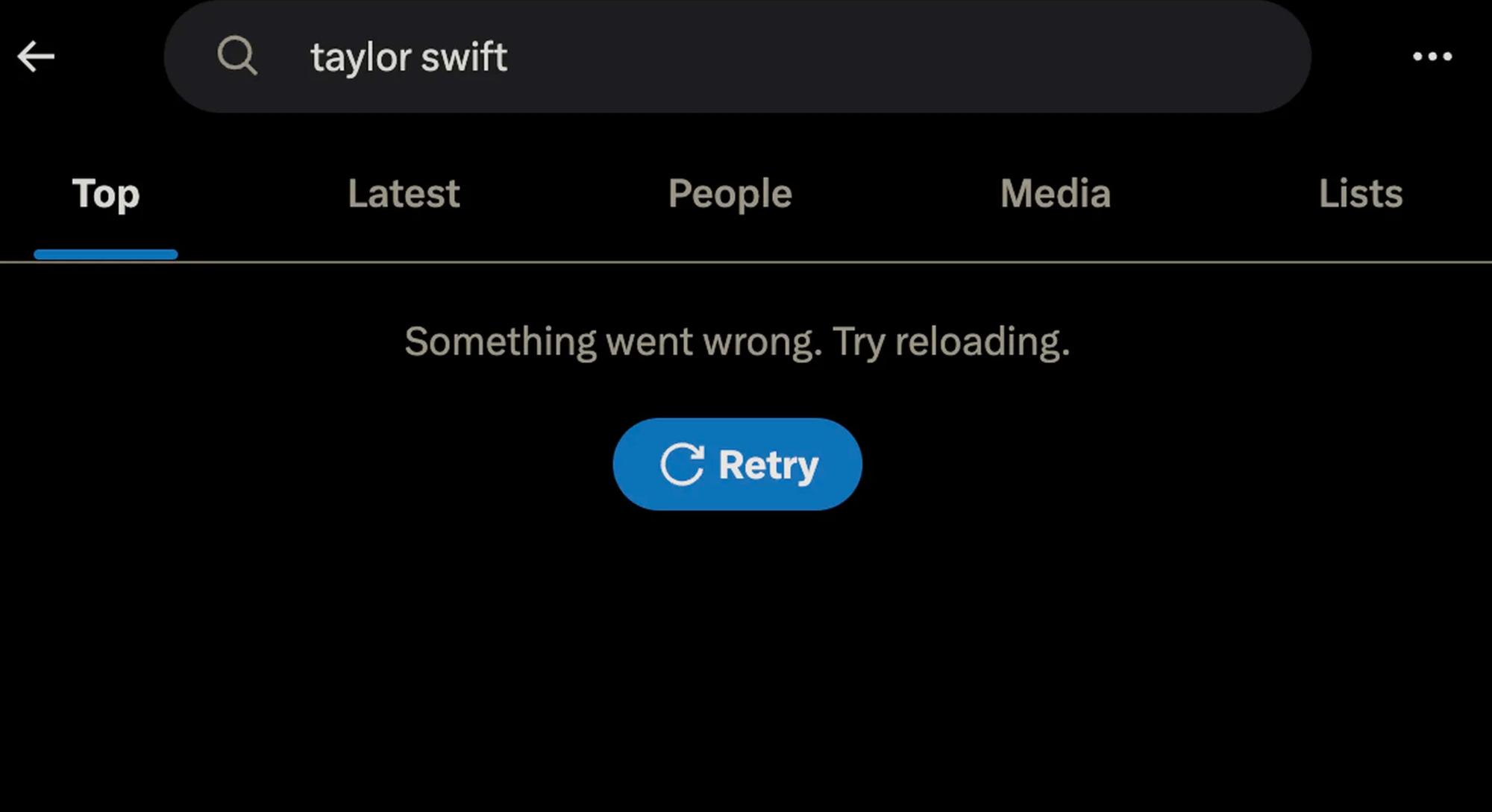

Last weekend, during a period of heightened Taylor Swift activity (she went to a football game and is also apparently a government psyop), Twitter shut down all searches for her name. Twitter’s skeleton crew set up the blanket block after deepfaked nude images of Swift spread across the site late last week; one image was viewed more than 45 million times.

This was only an act of content moderation in the crudest possible sense, because Twitter is dysfunctional (Elon Musk disassembled the moderation team; he is now building a “trust and safety center” after getting called into the principal’s office). But content moderation practices in general are having a hard time dealing with the influx of AI-generated, non-consensual sexual material. There are laws that deal with this, but in many states they haven't caught up to deepfakes.

So, enter the Taylor Swift-to-regulation pipeline. Bad news, though: politicians are using this news cycle to promote a new, extremist copyright law that would let you sign over your likeness for the rest of your life (and 10 years after).

The No AI FRAUD Act, introduced earlier this month, is being described as a solution to the deepfake problem. It’s not. It’s not innovative, either: it essentially creates something new for media companies to sue over.

No AI FRAUD establishes a transferable “property right” for voices and likenesses. As Elizabeth Nolan Brown summarizes it, “people can only use someone's ‘digital depiction or digital voice replica’ in a ‘manner affecting interstate or foreign commerce’ if the individual agrees (in writing) to said use.”

Problem one: People who sign away their rights under this act—which they will definitely be pressured to do—will lose control of their image and likeness.

That would be like Taylor Swift signing away not just the rights to her master recordings as a young artist, but also the right to her face. And the right to ever sing in her own voice against the wishes of whoever owns it. You can’t get a new face or voice the way you can re-record your early albums. This seems awful for artists and for human artistry at large, as does this next concern:

Under this bill, there’s nothing to prevent the corporations or Scooter Brauns who acquire likeness IP rights from creating AI-generated digital replicas of artists without the consent of the artist themselves.

No AI FRAUD, as well-intentioned as it may be, seems like a fast track to exploitation by powerful media figures. Any artist who signs away their likeness rights could become trapped in a creative purgatory reminiscent of Britney Spears’ conservatorship. While the draft bill does contain some protections for agreements about use in advertisements or expressive works, it’s not at all clear that those limits would apply to the actual transfer of rights.

Problem two: This bill doesn’t only threaten people who get offered a recording or publishing contract. It also attacks online speech in general via carve-outs to Section 230, the Internet’s First Amendment.

Under No AI FRAUD, websites that host AI-generated content could become liable for the copyright-infringing stuff their users post… even if they have no way of knowing it’s AI-generated. This would lead to heavy-handed crackdowns on online speech. Either DMCA takedown requests would go crazy, or platforms would start banning anything with a copyrightable “likeness.” Or both.

Taylor Swift can, and may, take legal action against Twitter: there are avenues that don’t require No AI FRAUD’s extreme IP rules. And if she doesn’t need No AI FRAUD, who would it actually help? A high schooler, a commission artist, an indie journalist? Unless they can tap into Swiftian resources, probably not. Expanding the lawsuit arena isn’t going to benefit anyone except the people already at the top.

N.b. we left Substack

Hello world etc., we moved our newsletter to Ghost. Feel free to reply if anything looks weird, I'm trying to figure out where all the buttons are.
