AI literally does not care about us

Over the past few months, news has slowly dripped out that UnitedHealth, America’s largest health insurer, has been denying Medicare claims with an AI algorithm known to screw up about 90% of the time. The algorithm, which UnitedHealth purchased in 2020, routinely overrides doctor recommendations for post-acute care, kicking patients out of rehab centers and costing them and their families tens of thousands of dollars.

The details are rough. One patient, Dale Tetzloff, suffered a stroke last October. His doctor recommended 100 days of inpatient recovery, but UnitedHealth’s algorithm cut off his coverage after only 20 days. Dale and his doctor appealed, but it went nowhere:

Tetzloff asked UnitedHealth and NaviHealth why they issued denials, and the companies “refused to provide any reason, stating that it is confidential,” according to the complaint. He and his wife spent $70,000 for his care. Tetzloff died in an assisted living facility this past October. (STAT)

The headline villain here is an algorithm, but it’d be a mistake to call this an AI story. Really, it’s a story about bureaucracy. And, as anthropologist David Graeber would say, bureaucracy is really about violence.

In 2006, Graeber delivered a talk that I’ve returned to again and again. He opens with a tale quite like Tetzloff’s: Graeber’s mother had recently suffered a series of strokes, and he tries to qualify her for Medicaid and in-home care. His efforts are rebuffed by a succession of nurses, social workers, and notaries, each of whom discovers some infinitesimal flaw in this form or that. The situation is, to get this word out of the way, Kafkaesque.

I think this is a relatable experience for many of us. But Graeber’s point isn’t that the Social Security Administration sucks or that Medicaid functionaries are dumb. It’s that bureaucracy invariably pops up around situations that are already inherently violent.

In Tetzloff’s case, the AI denial, the multiple appeals, and the administrative hell I’m sure his family experienced during and after the ordeal all covered up a simple fact: UnitedHealth was revoking his access to healthcare, quite literally a life-or-death situation. Resisting wasn’t an option. If Tetzloff had refused to leave the rehabilitation center after 20 days, had he chained himself to his bed in protest, he would have been “physically removed by armed men” (paraphrasing Graeber).

It could have been a human being who cut off Tetzloff’s coverage. It ultimately doesn’t matter, because the underlying violence is the same. In these already-shitty situations, though, AI is a compounding factor, not because it makes the health insurer, unemployment office, or parole board intrinsically worse, but because it calcifies their logic.

Later in the talk, Graeber goes into something he calls “imaginative labor.” This is a gem of a concept, and the gist of it is that the people at the bottom of a system do a lot of work, a lot of labor, thinking about the people at the top. We try to figure out if our boss is having a bad day. We try to anticipate a traffic stop. We try to appease a teacher, or a parent. Authority figures, on the other hand, don’t tend to speculate about the private minds of the disempowered. They don’t have to, because they’re the ones with the big stick.

AI algorithms push this inverted relationship to the extreme. We put a lot of effort into anticipating algorithmic decisions, but unlike a boss or a police officer or a claims analyst, the algorithm quite literally cannot care about us. When AI is injected into already-violent bureaucratic situations, it spawns one of the most lopsided power relations conceivable.

Algorithms have been creeping into our most boring, most violent institutions for some time now. They’re deciding if someone has an arrestable face, or if they deserve welfare benefits. The existential-risk crowd has it right—we do have to worry about powerful machines that can’t be reasoned with. But the robotic overlord, such as it is, has been inside of us all along.

A victory

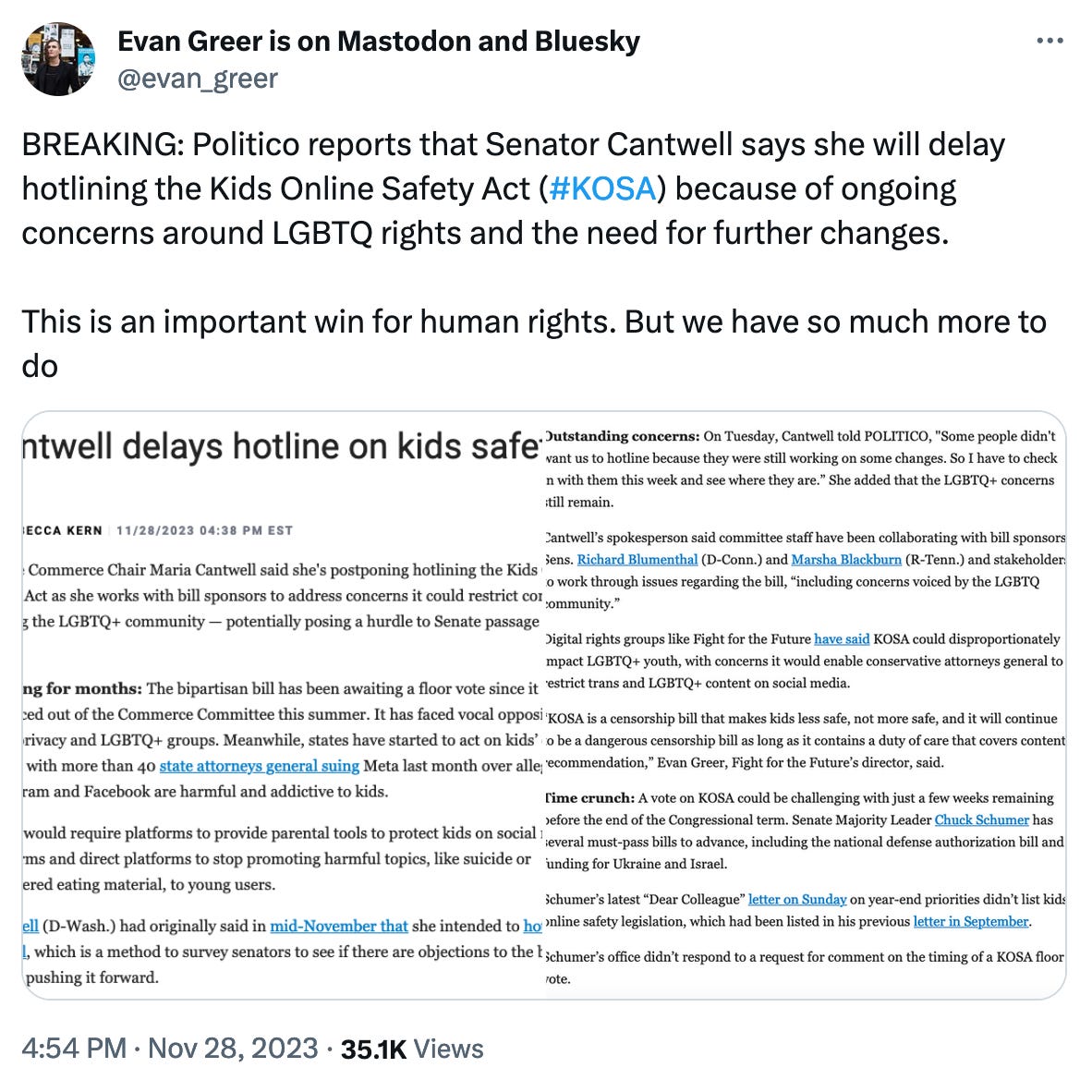

For months, we and a ton of other groups have been fighting the Kids Online Safety Act (KOSA), a bill that would drastically increase censorship and surveillance online. Last week, we got a really good swing in. Read more from Fight director Evan Greer:

And a good read

Deb Chachra just released a book I’m pretty excited about, How Infrastructure Works. I can’t stop thinking about (or recommending) her earlier essay, Care at Scale.
